Google's Project Astra, Veo, and Gemini Upgrades Join the AI Race

This is Google’s response to OpenAI.

A general-purpose AI, one that people can truly use every day: these days, it would be embarrassing to hold a launch event with anything less.

In the early hours of May 15, Google I/O, the annual developer conference known as the “Spring Festival Gala of the tech world,” officially began. How many times was artificial intelligence mentioned in the 110-minute main keynote? Google counted it up itself:

Yes, AI was being talked about every minute.

Competition in generative AI has recently reached a new peak, and the content of this year’s I/O naturally revolved around artificial intelligence.

“A year ago on this stage, we first shared our plans for the native multimodal large model, Gemini. It marked the new generation of I/O,” Google CEO Sundar Pichai said. “Today, we hope everyone can benefit from Gemini’s technology. These groundbreaking features will penetrate into search, images, productivity tools, Android systems, and many other aspects.”

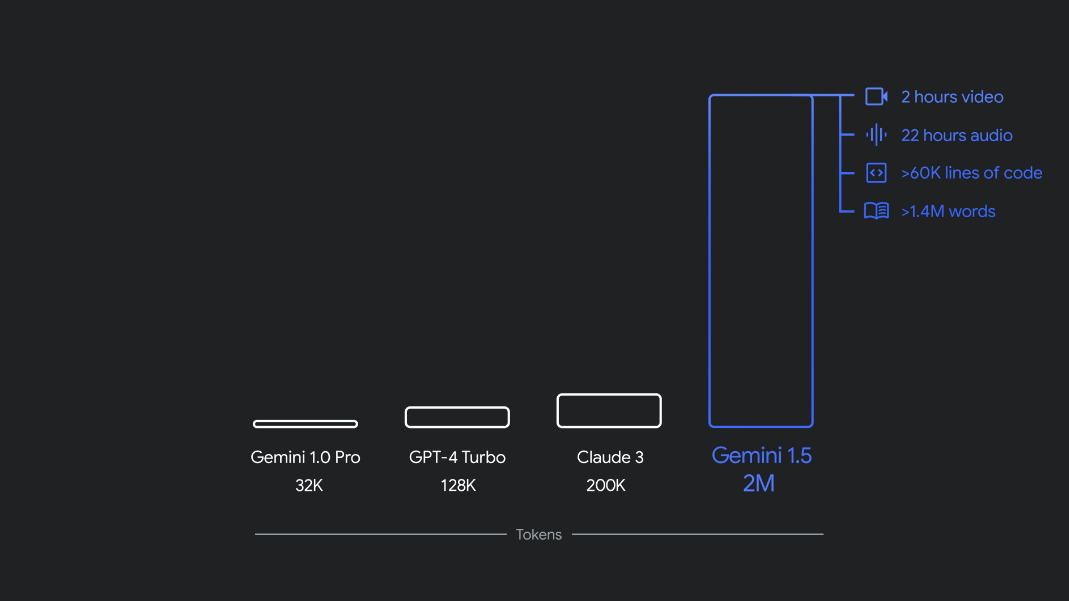

Currently, both Gemini 1.5 Pro and Gemini 1.5 Flash are available in public preview with a 1 million token context window in Google AI Studio and Vertex AI. In addition, 1.5 Pro now offers a 2 million token context window, via a waitlist, to developers using the API and to Google Cloud customers.

Additionally, Gemini Nano has been expanded from text-only input to include image input. Later this year, starting with Pixel, Google will launch a multimodal Gemini Nano. This means that the on-device model can process not only text input but also richer contextual information, such as visuals, sound, and spoken language.

The Gemini family welcomes a new member: Gemini 1.5 Flash

The new 1.5 Flash has been optimized for speed and efficiency.

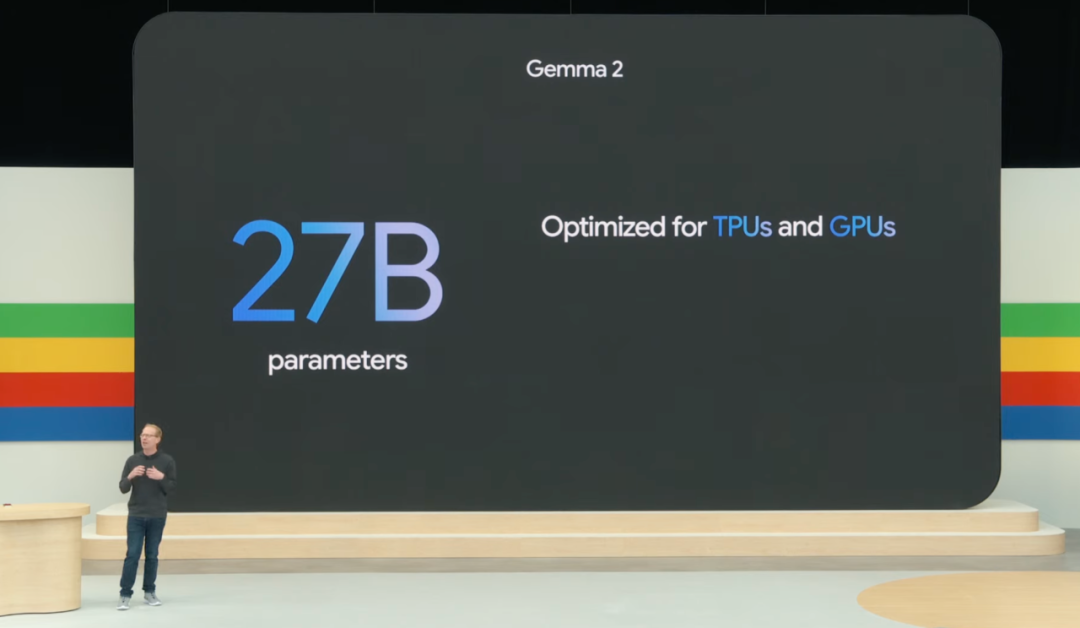

New Generation Open Source Large Model Gemma 2

Today, Google also released a series of updates to its open source large model Gemma: Gemma 2 is here.

According to Google, Gemma 2 uses a new architecture designed for breakthrough performance and efficiency. The newly open-sourced model has 27B parameters.

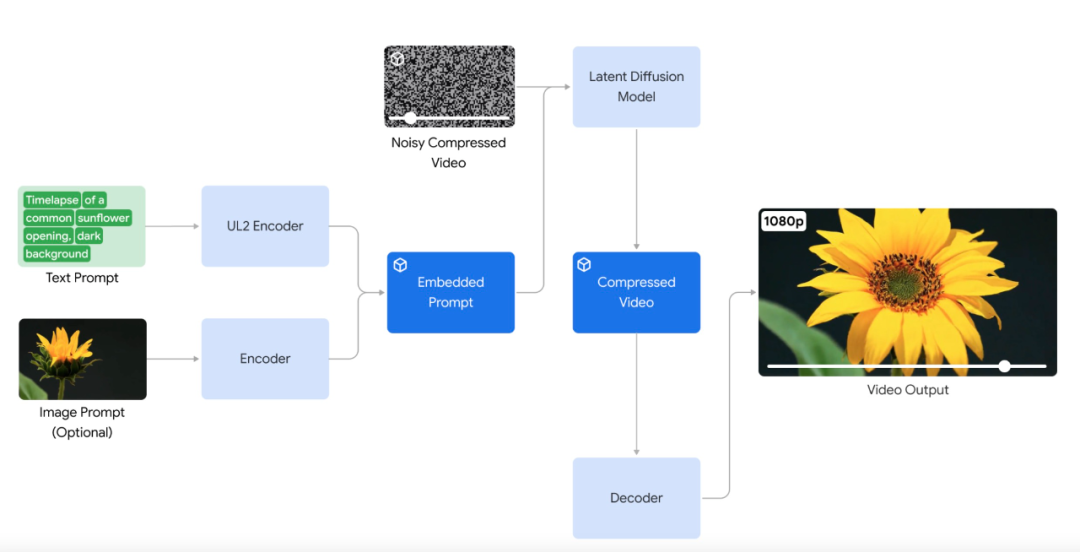

When it comes to long videos, Veo can produce clips of 60 seconds or even longer. It can do this from a single prompt or from a series of prompts that together tell a story. This capability is key to applying video generation models in film and television production.

Veo builds on Google’s earlier work in visual content generation, including Generative Query Network (GQN), DVD-GAN, Imagen-Video, Phenaki, WALT, VideoPoet, Lumiere, and others.